Hey everyone,

Looking at satellite images all of last Saturday reminded me a lot of playing SimCity, so I decided to pick it up again over the weekend. I don’t play video games that much (at least not consistently), and sometimes I’ll abandon a game for months or years because I have something better to do. For SimCity, the cycle goes like this:

- Buy the game, quit in frustration after a month because I can’t get my city to grow

- Come back a few months later, finally get the city to grow a little, and then quit in frustration when it gets destroyed by an earthquake

- Come back a few months later, get a little better, then quit because summer’s over and I have to go back to school

- Come back after a couple of years, finally get good at the game, only to learn that a new version’s about to come out

- Purchase the new game, repeat…

Not long after I got my region to finally grow past 300,000 citizens, I learned that the next iteration in the franchise, SimCity 5, will be released sometime next year. I’m really excited about this next version since the current one’s been around for almost 10 years (despite that, the graphics have aged really well). Some of the early screenshots looked unpleasantly cartoonish and lacking in detail, but the artwork’s gotten better after each press release, so hopefully the game delivers.

Anyway, I’d like to get the population of my region past one million before next year, since I haven’t done that in any of the game’s previous versions (except by using arcologies in SC2). Here’s a snapshot of what I have so far:

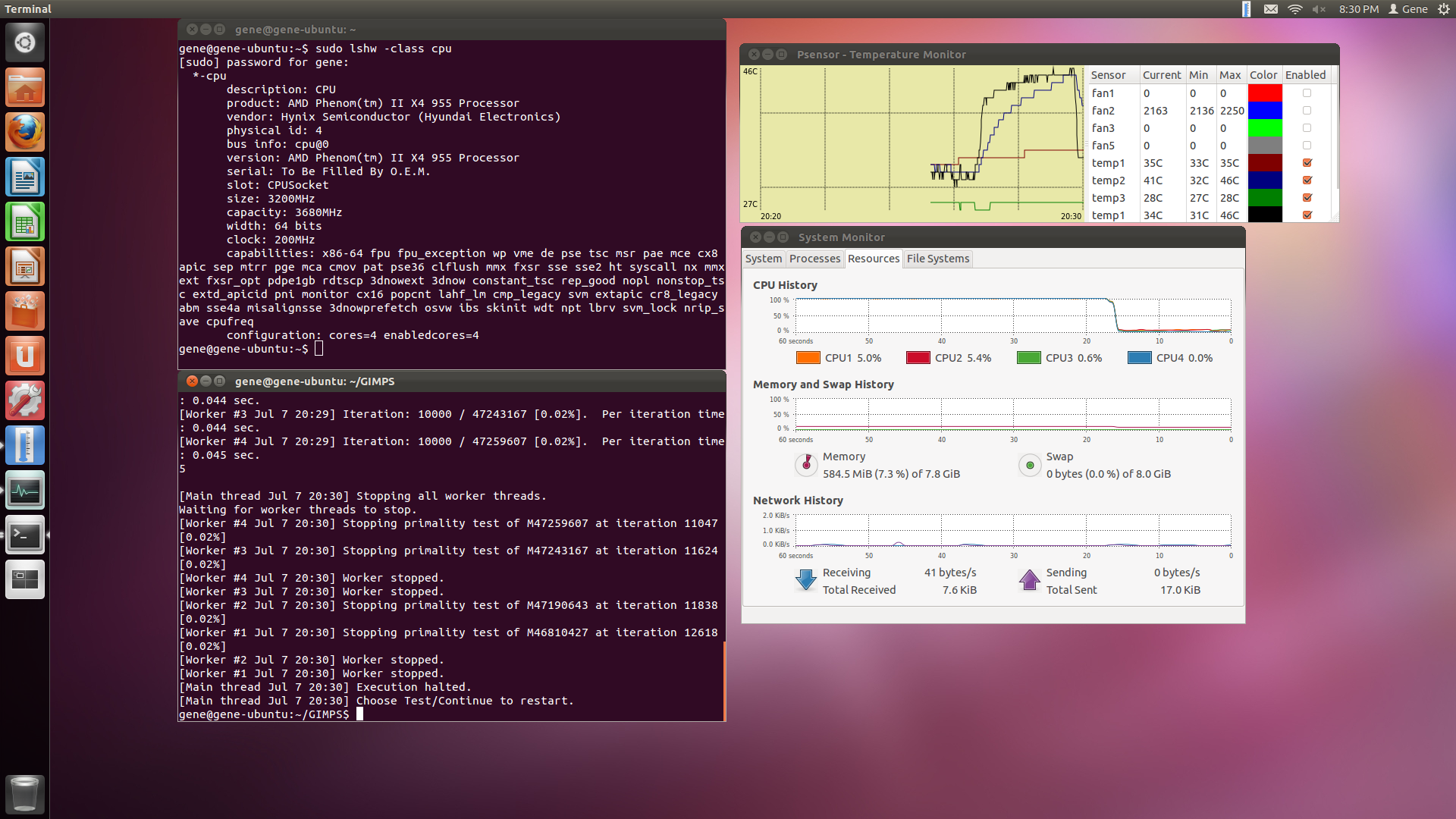

This image represents a small portion of a huge terrain map that I downloaded from the Simtropolis community. Back in 2003, when the game first came out, I kept running out of room with the stock regions so I decided to get something bigger this time around. Here you see a cluster of 4 cities connected by 2 bridges spanning a river. Here’s a snapshot of Sector 0, the oldest and largest city in the region:

And here’s a closeup shot of downtown:

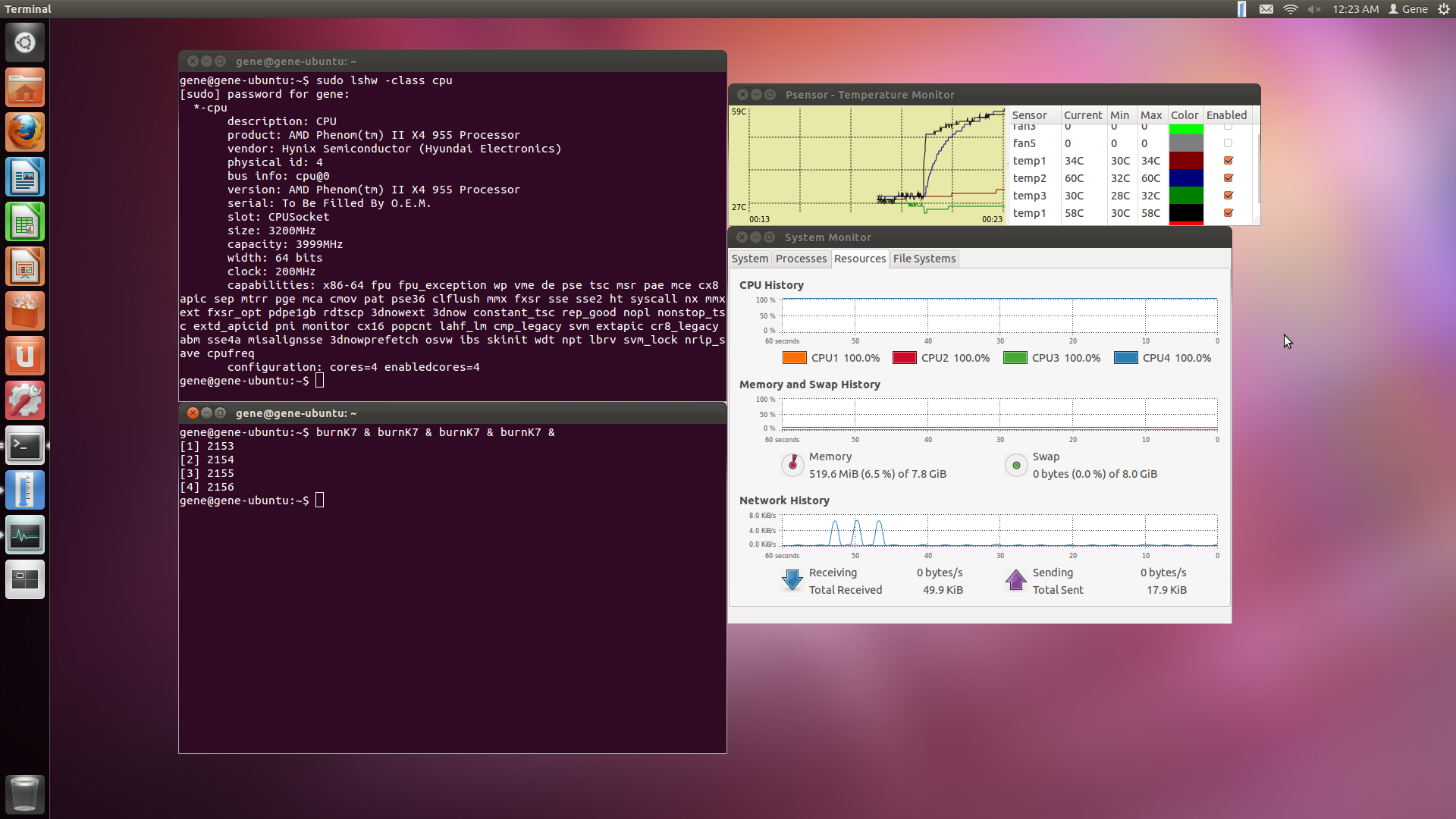

So basically, the idea of the game is to do whatever you want with your city as long as it doesn’t go bankrupt. For beginners, the hardest part is balancing the budget. In past versions, as soon as you mastered the budget, you pretty much mastered the rest of the game. However, in SC4 the additional task of traffic micromanagement made it difficult for even seasoned players to expand their cities. It wasn’t entirely the players’ fault, as fans discovered a bug in the traffic engine that caused citizens to pick the shortest routes for their commutes rather than the fastest ones. This wasn’t discovered until the late 2000s, several years after SC4 came out. I think this is one of the reasons why so many people found the game frustrating, and it may have been a contributing factor in EA’s decision to not create another version until 2013. Fortunately, a network modification tool has since fixed the problem, making it much easier to manage traffic:

The above image shows highway usage in the northeast section of Sector 0. This highway connects people living in the suburbs to the downtown area and the neighboring city to the east. In previous versions, you only needed to connect your roads and your city would grow. In the current version, however, you have to consider the capacity, speed, noise, and distance of the various modes of transportation, or the city will fail to grow. I think the developers purposely made this the most important part of the game.
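For anyone curious what "shortest but not fastest" means in practice, here’s a rough Python sketch. To be clear, this is not the game’s actual pathfinding code (I have no idea what that looks like). It’s just Dijkstra’s algorithm run on a tiny road network I made up, once with distance as the cost and once with travel time. The road names, distances, and speeds are all hypothetical.

```python
import heapq

# Hypothetical road network (made up for illustration, not taken from the game):
# node -> list of (neighbor, distance_km, speed_kmh)
ROADS = {
    "suburb": [("main_st", 2.0, 30.0), ("onramp", 3.0, 50.0)],
    "main_st": [("downtown", 2.0, 30.0)],
    "onramp": [("offramp", 4.0, 100.0)],
    "offramp": [("downtown", 1.0, 50.0)],
    "downtown": [],
}


def by_distance(distance_km, speed_kmh):
    """Edge cost in kilometers: ignores how fast the road is."""
    return distance_km


def by_time(distance_km, speed_kmh):
    """Edge cost in minutes: accounts for the road's speed."""
    return distance_km / speed_kmh * 60.0


def best_route(roads, start, goal, cost):
    """Dijkstra's algorithm; returns (total_cost, path) or None if unreachable."""
    queue = [(0.0, start, [start])]
    visited = set()
    while queue:
        total, node, path = heapq.heappop(queue)
        if node == goal:
            return total, path
        if node in visited:
            continue
        visited.add(node)
        for neighbor, distance_km, speed_kmh in roads[node]:
            if neighbor not in visited:
                step = cost(distance_km, speed_kmh)
                heapq.heappush(queue, (total + step, neighbor, path + [neighbor]))
    return None


shortest = best_route(ROADS, "suburb", "downtown", by_distance)
fastest = best_route(ROADS, "suburb", "downtown", by_time)
print("Shortest route:", shortest[1])
print("Fastest route:", fastest[1])
```

In this made-up network, the distance-based search sends commuters down the slow surface street (4 km), while the time-based search puts them on the highway, which is twice as long but gets them downtown sooner. If everyone picks routes the first way, the highway sits empty while the local streets clog up.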

Eventually, I’d like to get into modding, since apparently EA/Maxis plans to make SC5 “fully moddable” (whatever that means). I’ve heard that the new Glassbox engine has a more realistic economic simulator, and I’d really like to see how it works if the developers open up the design. Unfortunately, their last attempt at rebooting the SC franchise (SC Societies) failed miserably, and it looks like SC Social will fail shortly… I suppose in the worst case I’ll at least have SC4.