Hey everyone,

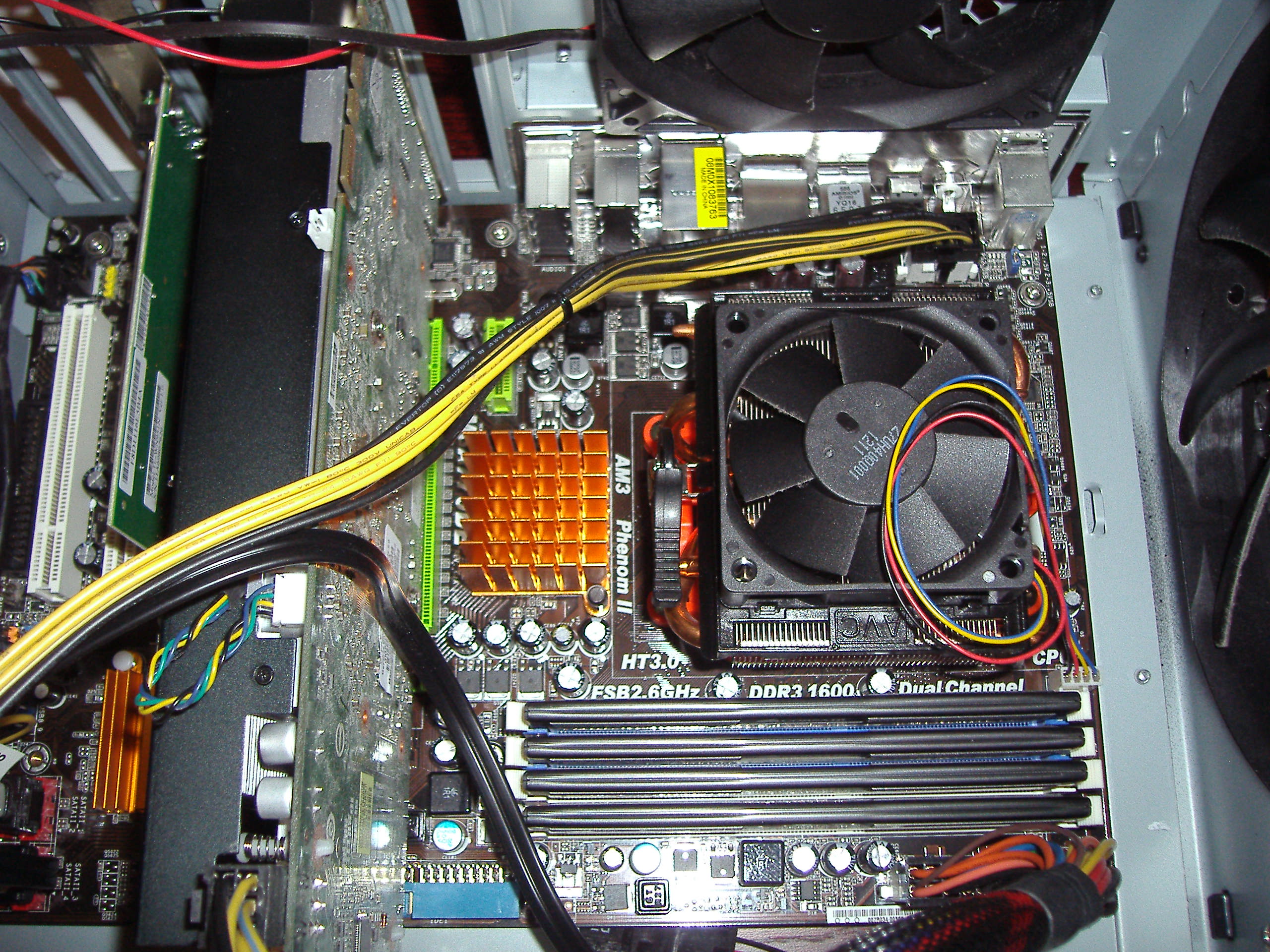

Last week I wrote about liquid cooling and overclocking my Linux server. I spent that Sunday mostly fiddling with the CPU multiplier and voltage settings, but I didn’t subject the machine to any lengthy stress testing because I mainly wanted to see how high I could safely overclock the core. My friend Daniel told me that to truly test the stability of a particular overclock setting, I’d have to run the computer under load for several hours to make sure that programs ran correctly and that no wild temperature fluctuations took place. Furthermore, I’d have to run two separate batteries of tests (one with the AC on and one without) to make sure that the machine wouldn’t overheat without air conditioning.

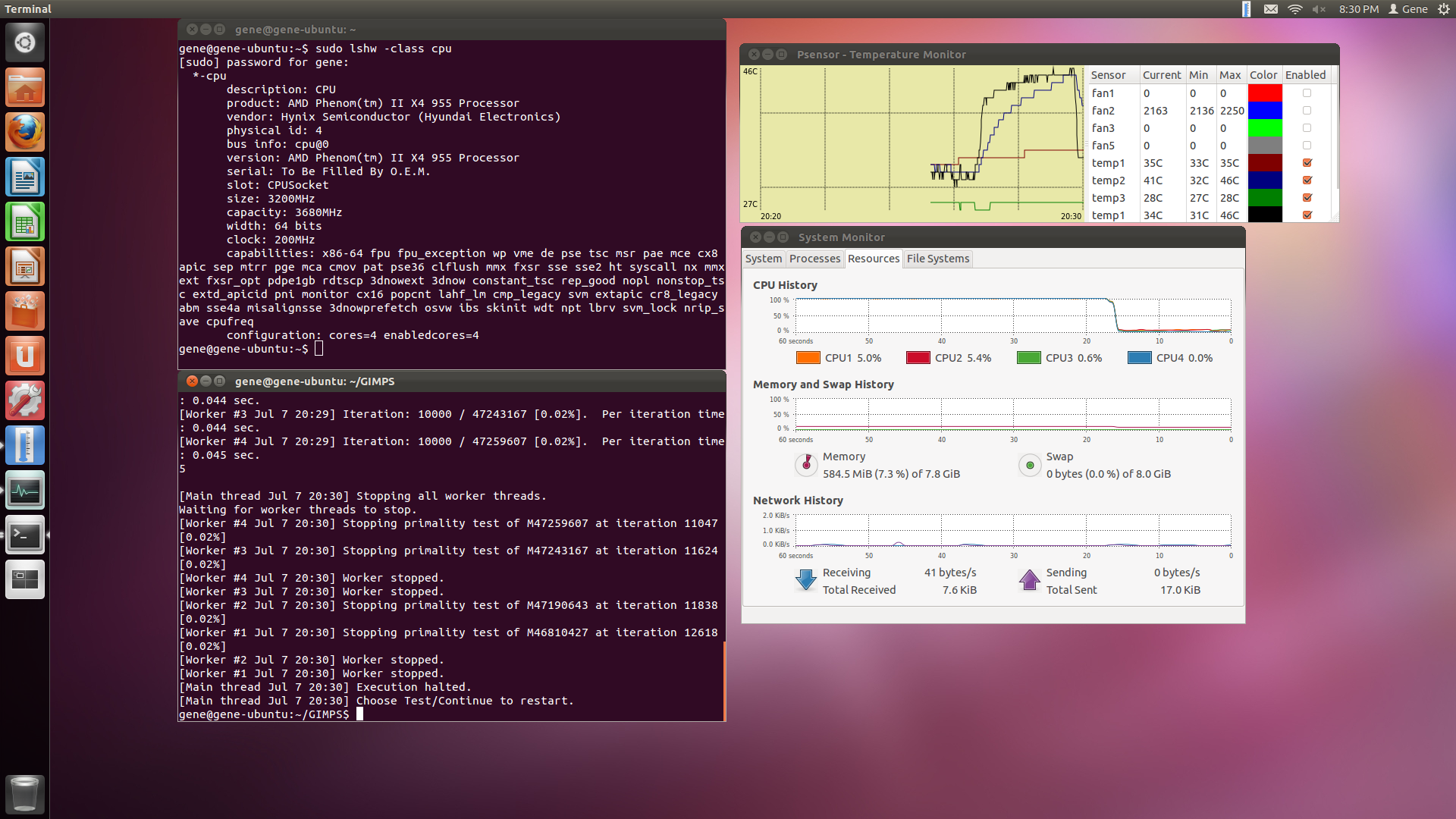

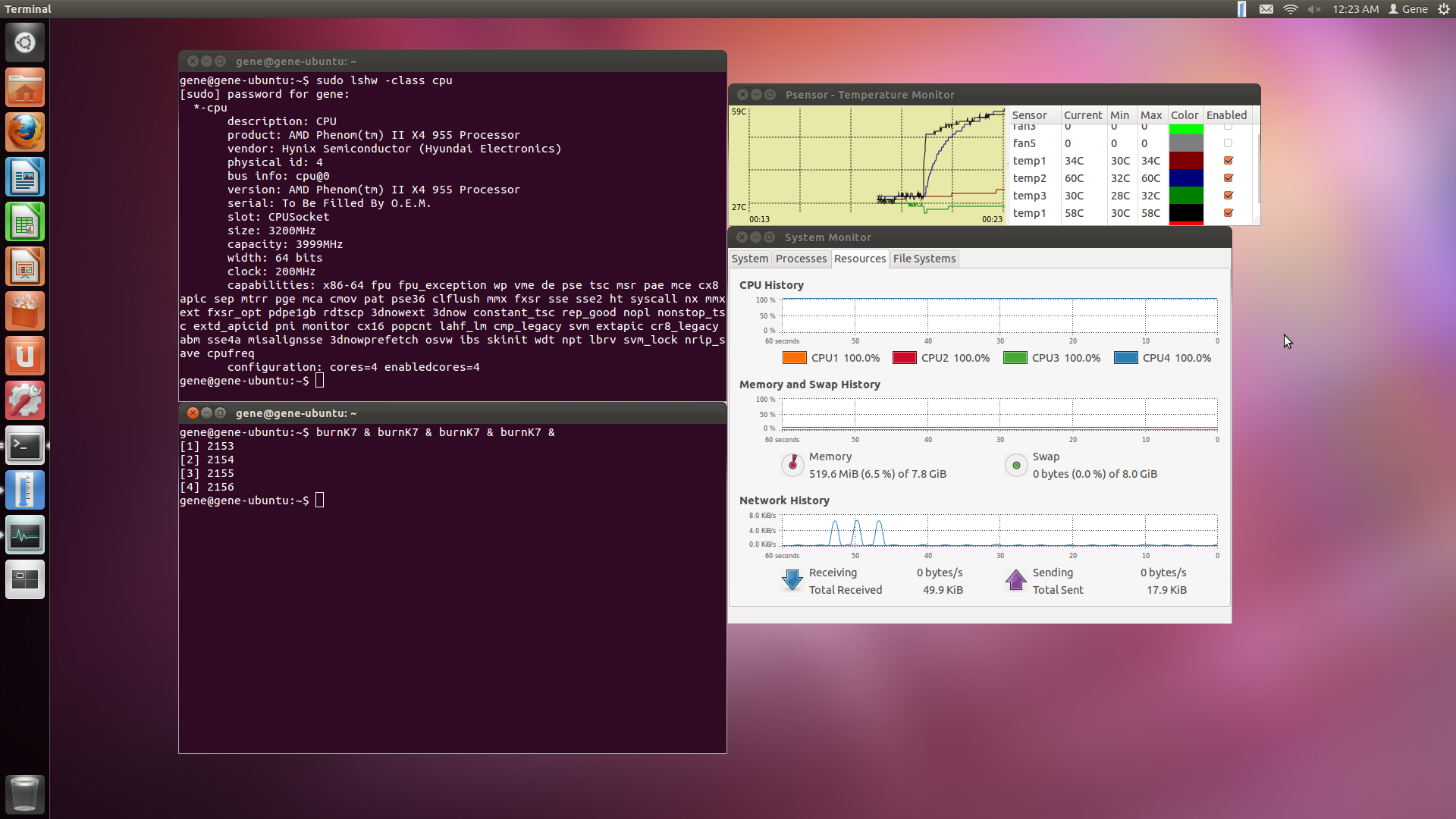

Unfortunately, I couldn’t complete the entire experiment because it rained almost every day last week (and is expected to keep raining this week), so it never got hot enough outside (and hence inside) to test the machine under summer conditions. However, I still had the opportunity to see how the computer would operate in cool conditions, which I had originally intended as a control. Thus, I decided to test the CPU using GIMPS at five clock settings: 3200 MHz (stock), 3360 MHz, 3519 MHz, 3680 MHz, and 3840 MHz.

The test was simple. First I’d start a loop in the terminal that dumps the machine’s sensor readings into a text file, then run GIMPS over the course of a workday (at least 9 hours), and finally import the log into an Excel spreadsheet to compare the results. Searching ubuntuforums.org turned up the following loop, which I used to log the temperatures once a minute during each test:

    while true; do sensors >> log.txt; sleep 60; done
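Since each run was meant to cover a fixed stretch of time, a bounded variant of this loop may be convenient. The following is only a sketch: `log_samples` is a made-up helper name (not part of lm-sensors), and it assumes a POSIX shell. It runs a command a set number of times, a set number of seconds apart, and appends the output to a file.

```shell
# log_samples CMD INTERVAL COUNT OUTFILE
# Hypothetical helper: runs CMD COUNT times, INTERVAL seconds apart,
# appending each run's output to OUTFILE.
log_samples() {
    i=0
    while [ "$i" -lt "$3" ]; do
        # $1 is left unquoted on purpose so "cmd arg" splits into words.
        $1 >> "$4"
        i=$((i + 1))
        # Skip the pause after the final sample.
        if [ "$i" -lt "$3" ]; then
            sleep "$2"
        fi
    done
}

# Example: one reading per minute for 9 hours (9 * 60 = 540 samples).
# log_samples sensors 60 540 log.txt
```

Unlike `while true`, this stops on its own, so the log ends at a known sample count instead of whenever the terminal is closed.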

Each pass through the loop appended the following `sensors` output to the text file:

    w83627dhg-isa-0290
    Adapter: ISA adapter
    Vcore: +1.04 V (min = +0.00 V, max = +1.74 V)
    in1: +0.00 V (min = +0.06 V, max = +1.99 V) ALARM
    AVCC: +3.28 V (min = +2.98 V, max = +3.63 V)
    +3.3V: +3.28 V (min = +2.98 V, max = +3.63 V)
    in4: +1.84 V (min = +0.43 V, max = +1.28 V) ALARM
    in5: +1.70 V (min = +0.66 V, max = +0.78 V) ALARM
    in6: +1.64 V (min = +1.63 V, max = +1.86 V)
    3VSB: +3.49 V (min = +2.98 V, max = +3.63 V)
    Vbat: +3.44 V (min = +2.70 V, max = +3.30 V) ALARM
    fan1: 0 RPM (min = 2636 RPM, div = 128) ALARM
    fan2: 2163 RPM (min = 715 RPM, div = 8)
    fan3: 0 RPM (min = 1757 RPM, div = 128) ALARM
    fan5: 0 RPM (min = 2636 RPM, div = 128) ALARM
    temp1: +27.0°C (high = +0.0°C, hyst = +100.0°C) sensor = thermistor
    temp2: +27.0°C (high = +80.0°C, hyst = +75.0°C) sensor = thermistor
    temp3: +32.0°C (high = +80.0°C, hyst = +75.0°C) sensor = thermistor

    k10temp-pci-00c3
    Adapter: PCI adapter
    temp1: +27.5°C (high = +70.0°C)

    radeon-pci-0200
    Adapter: PCI adapter
    temp1: +55.5°C

The above output is quite cryptic, and it took me a while searching the forums before I found out that the CPU reading is the one listed under “k10temp-pci-00c3.” Because the loop recorded the same set of readings every minute, each sample occupies exactly 27 lines of the log (counting the blank line that follows each chip’s section), so a given reading always sits at the same offset within its sample. I used that fact to write a loop in VBA that extracts the five temperature readings into an Excel spreadsheet:

    Option Explicit

    ' Reads a log of repeated sensors output and copies the five temperature
    ' readings of each sample into columns A:E, one row per sample.
    Sub import_temperatures()
        Dim r As Long, m As Long    ' r = line number in the file, m = output row offset
        Dim temperature As String   ' current line of the log

        Range("A2:E1000000").Clear
        Open "C:\Users\Gene\Desktop\3840.txt" For Input As #1
        r = 1
        m = 0
        Do Until EOF(1)
            Line Input #1, temperature
            ' Each sample is 27 lines long, so r Mod 27 is the line's position
            ' within its sample. The reading is the four characters ending at
            ' position 19 of the trimmed line.
            Select Case r Mod 27
                Case 16    ' w83627dhg temp1
                    Range("A2").Offset(m, 0).Value = Right(Left(Trim(temperature), 19), 4)
                Case 17    ' w83627dhg temp2
                    Range("B2").Offset(m, 0).Value = Right(Left(Trim(temperature), 19), 4)
                Case 18    ' w83627dhg temp3
                    Range("C2").Offset(m, 0).Value = Right(Left(Trim(temperature), 19), 4)
                Case 22    ' k10temp temp1 (the CPU)
                    Range("D2").Offset(m, 0).Value = Right(Left(Trim(temperature), 19), 4)
                Case 26    ' radeon temp1, the last reading of the sample
                    Range("E2").Offset(m, 0).Value = Right(Left(Trim(temperature), 19), 4)
                    m = m + 1
            End Select
            r = r + 1
        Loop
        Close #1
    End Sub
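The macro depends on every sample being exactly 27 lines long, so it would silently pick up the wrong lines if a sensor were ever added or removed. As a rough alternative, here is a sketch in shell and awk that keys on the chip name instead of on line offsets. `chip_temps` is a hypothetical helper name, and the parsing assumes the layout shown in the log: a chip name alone on a line, followed by `label: value` lines.

```shell
# chip_temps CHIP LABEL LOGFILE
# Hypothetical helper: prints the LABEL reading under CHIP for every
# sample in LOGFILE, one value per line, without the "+" or the unit.
chip_temps() {
    awk -v chip="$1" -v label="$2:" '
        # A chip header is a single word with no colon, e.g. k10temp-pci-00c3.
        NF == 1 && $0 !~ /:/ { current = $1; next }
        # "+27.5°C" + 0 converts the leading numeric part, giving 27.5.
        current == chip && $1 == label { printf "%.1f\n", $2 + 0 }
    ' "$3"
}

# Example: CPU readings from the 3840 MHz run, one per line.
# chip_temps k10temp-pci-00c3 temp1 3840.txt > cpu_3840.txt
```

The resulting one-column file can be pasted or imported into a spreadsheet directly, which avoids the fixed-position string slicing as well.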

The test took 5 days to complete, so I had to be patient. Here are the results:

The results are very impressive. At stock settings, the CPU temperature hovered around 45 degrees Celsius at 100% load. This means that, at least in cool weather, I can leave the computer on all day and even the most intensive task won’t push the temperature past 50 degrees (in this test it didn’t even pass 47). Even at 3840 MHz, the temperature stayed at around 55 degrees Celsius over the course of 9 hours. I did, however, have to increase the voltage for clock speeds of 3519 MHz and above, so I’m not sure whether the temperature increases at those speeds were due to the higher voltage, the higher multiplier, or a combination of both. Moreover, I’m not sure whether the increased clock speeds made GIMPS run any faster, since the per-iteration time seems to depend on which exponent you are testing (I’m sure there’s a way to measure this, though). Nevertheless, I’m very satisfied with the results and with the ability of the liquid cooling system to keep temperatures stable while I’m away from home.
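For anyone who wants to put numbers behind statements like “hovered around 45” or “never passed 47,” a small summary over a column of readings is enough. The sketch below assumes a text file with one temperature per line and no blank lines (for example, one spreadsheet column saved as text); `temp_summary` is a made-up name.

```shell
# temp_summary FILE
# Hypothetical helper: prints the sample count, minimum, mean and maximum
# of a file that holds one temperature reading per line.
temp_summary() {
    awk '
        NR == 1 { min = max = $1 + 0 }   # seed min and max with the first reading
        {
            t = $1 + 0
            if (t < min) min = t
            if (t > max) max = t
            sum += t
        }
        END {
            if (NR > 0)
                printf "n=%d min=%.1f mean=%.1f max=%.1f\n", NR, min, sum / NR, max
        }
    ' "$1"
}

# Example (file name is illustrative):
# temp_summary cpu_3200.txt
```

Running it once per clock setting gives a compact table to compare, and the maximum is the figure that matters for the “won’t pass 50 degrees” claim.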