Hey everyone,

A while back I wrote about a Linux server I set up to do statistical work remotely from other computers. So far, I haven’t done much with it other than learn R and LaTeX, but recently I’ve realized that it would be a great place to document some of the algorithms I’ve developed through my modeling projects at work, in case I ever need to use them again (highly likely). Back in January, I wrote that I was concerned about the CPU getting too hot, since I leave the server on at home while I’m away at work. Because I leave the AC off when I’m gone, the air going into the machine is hotter, which hinders the cooling ability of the server’s fans.

I could leave the AC on, but that wouldn’t be environmentally friendly, so I’ve been looking for other ways to keep my processor cool. One of the options I decided to try was liquid cooling – which I heard was more energy efficient and better at cooling than the traditional air cooling found on stock computers. Moreover, I had seen some really creative setups on overclockers.net – which encouraged me to try it myself. To get started, I purchased a basic all-in-one cooler from Corsair. This setup isn’t as sophisticated as any of the custom builds you’d see at overclockers, but it was inexpensive and I thought it would give me a basic grasp of the concept of liquid cooling.

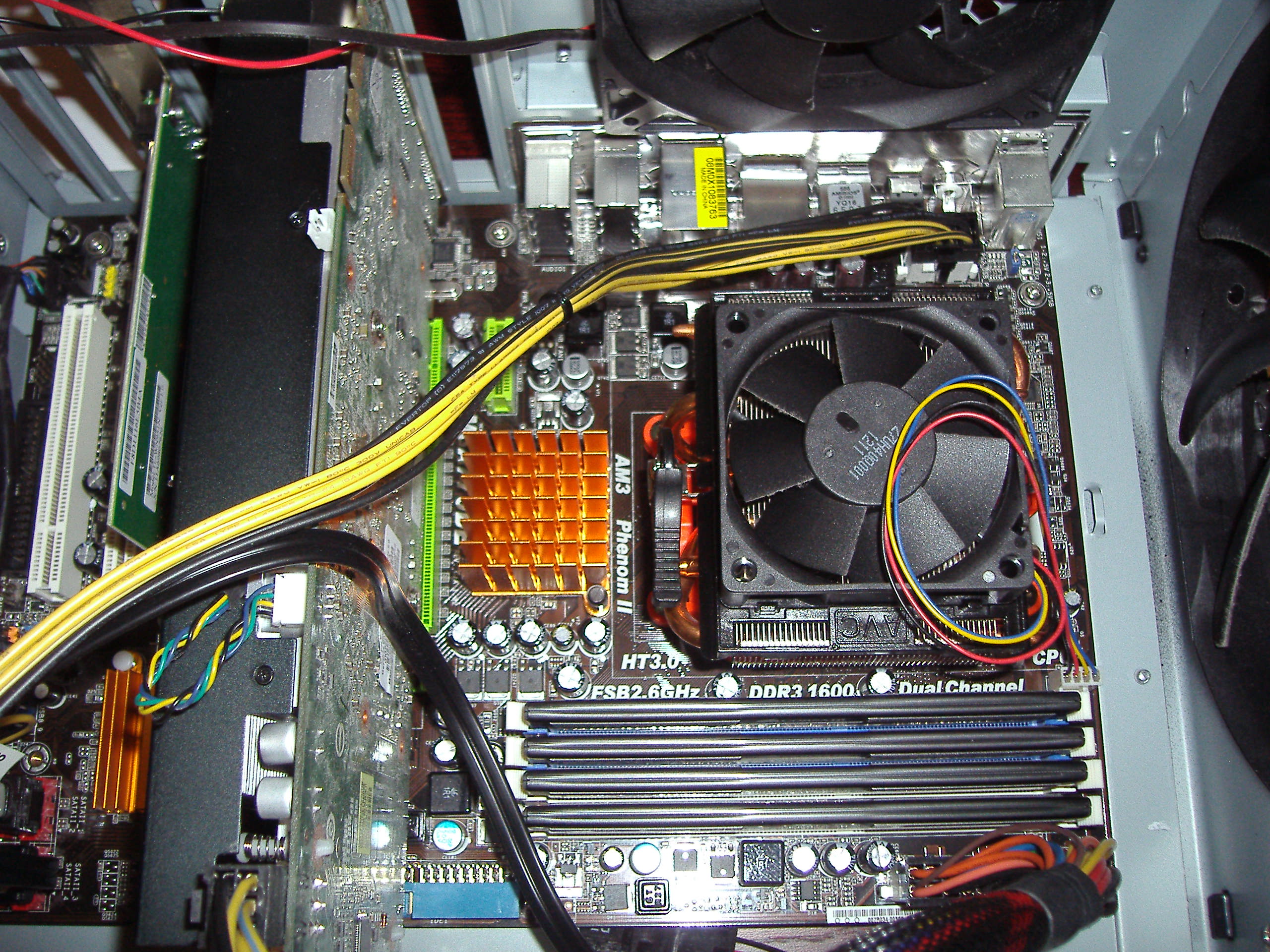

The installation was pretty easy – all I had to do was remove the old heatsink and screw the pump/waterblock onto the CPU socket. Then, I attached the 2 x 120 mm fans along with the radiator to the back of the case.

However, one of the problems with these no-fuss all-in-one systems is that you can’t modify the hose length, which might make the system difficult or impossible to install if your case is too large or too small. As you can see, I got lucky – the two fans along with the radiator barely fit inside my mid-tower Antec 900 case. If the case were any smaller, the pump would have gotten in the way and I would have had to remove the interior fan to make it fit. Nevertheless, I’m really satisfied with the product – as soon as I booted up the machine I was impressed by how quietly it ran.

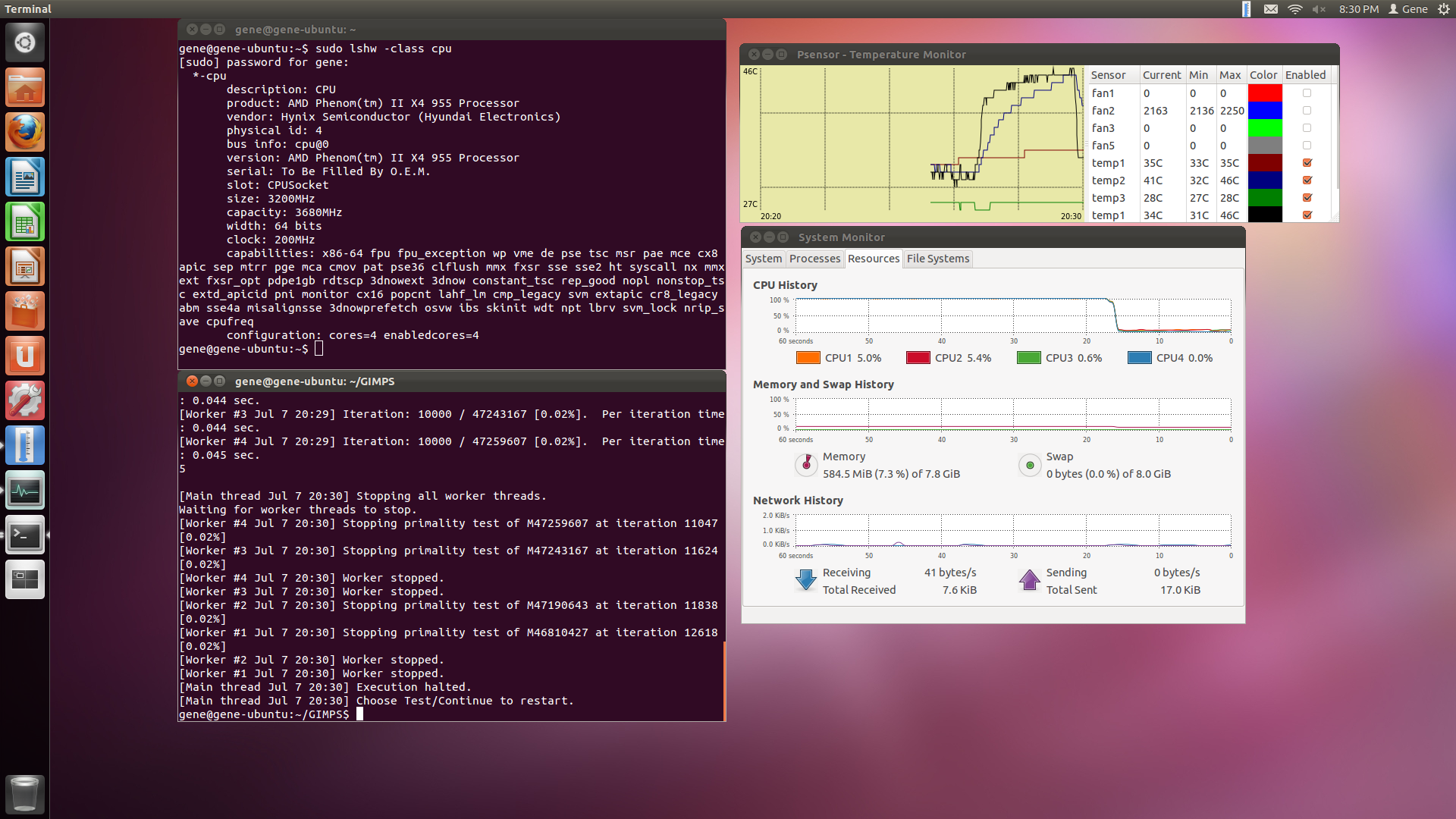

Naturally, I decided to overclock the processor to test the effectiveness of the new cooling system. I increased the clock speed of the CPU (AMD Phenom II) from 3200 MHz to 3680 MHz and ran all 4 cores at 100% capacity to see how high the temperatures would get. Here are the results:

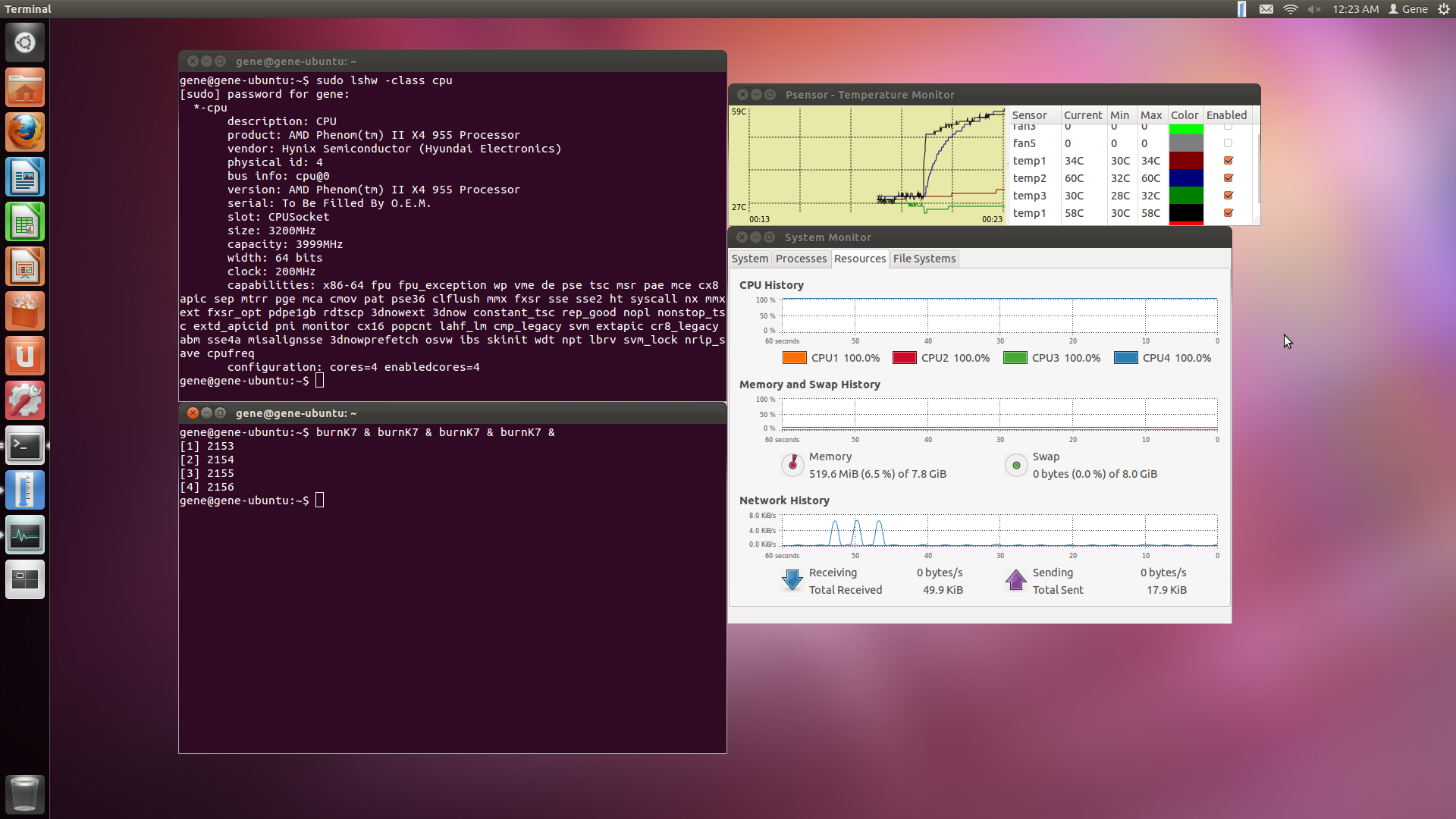

You can see that the maximum temperature was just 46 C – that’s pretty cool for an overclocked processor. I only ran the test for a few minutes because I had been steadily increasing the clock speed little by little to see how far it could go. The test ran comfortably at 3519 MHz, but as soon as I reached 3680 MHz the computer started having issues with booting up. I was able to reach 3841 MHz by increasing the voltage to 1.5 V and 3999 MHz by increasing the voltage to 1.55 V. I was somewhat disappointed because I couldn’t get the clock speed to surpass 4 GHz (as the Phenom II has been pushed to much higher clock speeds with more sophisticated cooling techniques). At this point I couldn’t even run mprime without having my computer crash, but I was able to continue the stress testing by using BurnK7:

You can see that the core temperature maxed out at 60 C, so I’m pretty sure I could have pushed it a little further. However, the machine wouldn’t even boot up after I increased the multiplier, so I called it a day. I contacted my friend Daniel Lin (who has been overclocking machines since middle school) with the results, and he responded with his own stress test using an Intel Core i7 quad core:

The impressive part is that he was able to reach 4300 MHz using nothing but stock voltage (1.32 V) and air cooling. He told me that I had an inferior processor and I believe him (then again, you get what you pay for – the Intel i7 is three times more expensive). If he had liquid-cooled his computer, he probably could have pushed it even further. Anyway, Daniel told me that you can’t be sure an overclock is truly stable unless you stress test it over the span of several hours. So, I decided that my next task would be to get Ubuntu’s sensors utility to write its readings to a text file while I run mprime over the course of 24 hours. I’d also like to compare temperature readings depending on whether or not the AC is turned on while I’m away at work. I’ll have the results up next week (hopefully).
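
For the curious, here is a minimal sketch of what that logging step could look like. It assumes Python 3.7+ and that the `sensors` command from the lm-sensors package is installed and configured. The function names, the log file name, and the one-minute sampling interval are all placeholders for illustration, not a finished setup.

```python
import subprocess
import time
from datetime import datetime


def read_sensors():
    """Return the raw text printed by the lm-sensors `sensors` command."""
    result = subprocess.run(
        ["sensors"], capture_output=True, text=True, check=True
    )
    return result.stdout


def log_readings(path, samples, interval_s,
                 read_fn=read_sensors, sleep_fn=time.sleep):
    """Append `samples` timestamped readings to `path`, `interval_s` seconds apart.

    `read_fn` and `sleep_fn` are hypothetical hooks so the loop can be
    tried out without real hardware sensors or real waiting.
    """
    with open(path, "a") as log:
        for i in range(samples):
            stamp = datetime.now().isoformat(timespec="seconds")
            log.write(f"=== {stamp} ===\n{read_fn()}\n")
            # Flush every sample so the file is current if the machine crashes.
            log.flush()
            if i < samples - 1:
                sleep_fn(interval_s)


# Example (assumed values): one reading per minute for 24 hours.
# log_readings("sensors_log.txt", samples=24 * 60, interval_s=60)
```

Running the logger in one terminal while mprime runs in another should leave a plain text file with a timestamp header above each block of readings, which makes it easy to compare an AC-on day against an AC-off day afterwards.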